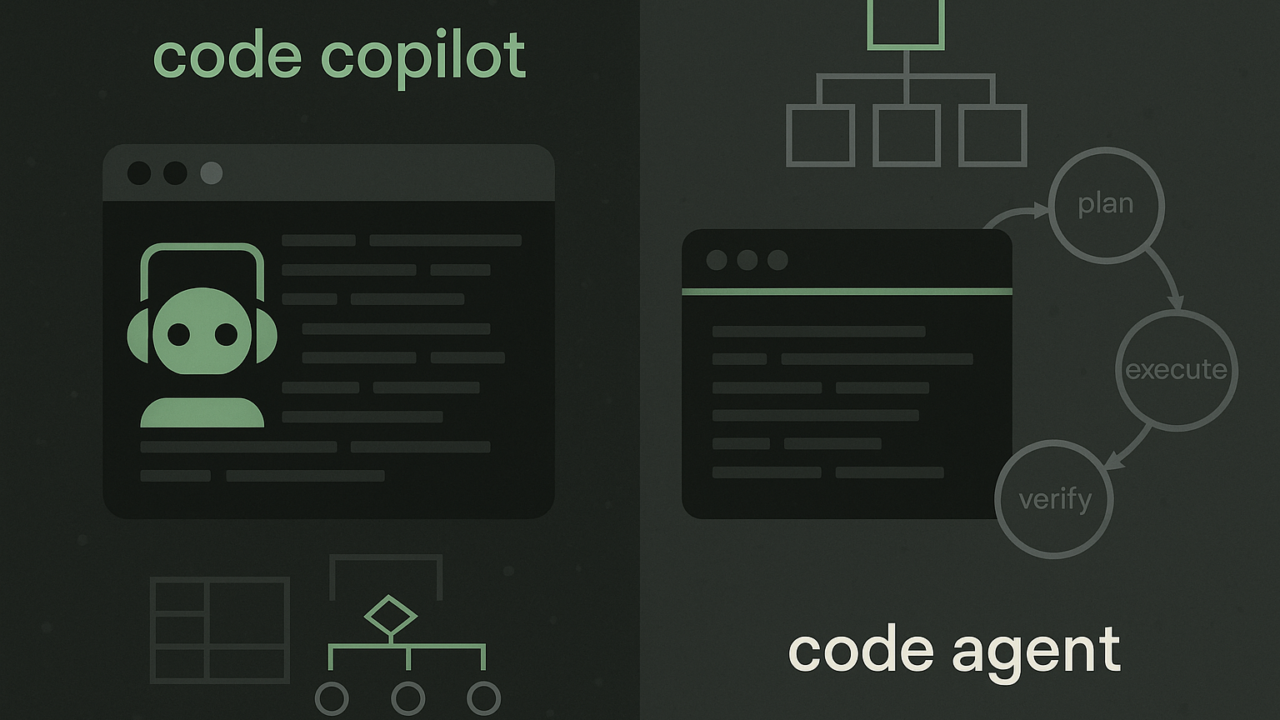

When AI coding assistants first began to appear in developers’ workflows, Daniil Mazepin immediately saw the appeal. API scaffolding, boilerplate code, test generation – tasks that once took hours could now be done in minutes.

“It’s seductive,” he said. “You see this instant boost in output and think – great, we’re more productive. But that’s also where the problem begins. I’ve seen engineers move quickly through tasks without fully understanding the system they’re working in. The tools provide answers before people formulate the right questions. And when that happens repeatedly, you start seeing gaps in judgment, in architecture, and in critical thinking.”

Daniil speaks from experience. He’s led engineering teams at Facebook (Meta), building the Shops e-commerce platform across Facebook and Instagram, and now drives engineering at Teya, a fintech company powering payments for SMEs. He’s also a mentor with the Global Mentorship Initiative and ADPList, and a regular conference speaker on engineering leadership and the future of work.

Speed without understanding

AI tools have undeniably changed the pace of software development. For Daniil, the danger is in confusing speed with depth.

“AI solves the ‘what’ incredibly well,” he explained. “But without understanding the ‘why’, engineers aren’t developing the mental models they need. And that depth is what you rely on in emergencies, when trade-offs matter and you can’t afford the wrong call.”

At Teya, the stakes are high. A small bug in payment processing isn’t just an engineering problem – it’s a merchant unable to take a payment. “Speed without understanding,” he said, “isn’t a productivity gain. It’s a hidden liability.”

The silent shift in collaboration

One of the clearest cultural changes Daniil has observed is the drop in spontaneous collaboration. “Before, a junior engineer who got stuck might ask a teammate for help,” he said. “Now, they ask an LLM. The work still gets done – but it happens in isolation.”

This “silent engineer” effect, as he calls it, reduces the natural exchange of ideas and erodes shared context. “The best engineering cultures aren’t just productive – they’re interactive. When you lose those touchpoints, you create silos, and the team’s resilience suffers.”

Mentorship as the counterweight

Mentorship, Daniil believes, is the strongest antidote to this trend. In addition to his leadership work, he mentors engineers around the world. He’s noticed that those who rely exclusively on AI tend to plateau earlier in their careers.

“The engineers who grow the fastest are the ones who ask questions, who challenge feedback, who reflect,” he said. “AI can give you a correct answer, but it can’t teach you how to navigate ambiguity, handle a tricky stakeholder, or mediate a conflict in a code review. AI is a brilliant assistant, but it’s a poor guide.”

Rethinking seniority

If AI tools can make a junior engineer look faster and more capable, what does that mean for the definition of “senior”? Daniil believes the criteria have to change.

“Speed used to be one of the signals,” he said. “Now, AI can make anyone fast. Seniority today is about decision-making, systems thinking, and the ability to raise the performance of the people around you.”

That shift, he argued, means leaders need to adjust how they measure impact. “We have to stop rewarding output alone. We should be valuing the engineers who create clarity, who raise the bar for the team, and who can guide others. Those are things AI can’t do.”

The role of companies in responsible AI adoption

Daniil is clear: companies can’t be passive observers in this shift.

“It starts with culture – do you celebrate speed alone, or do you reward thoughtful solutions?” he asked. “Then it’s about enablement – giving teams space to experiment with AI tools, but also frameworks to reflect on their limitations.”

And governance matters too. “We’ve put more emphasis on peer review and architectural oversight, not because we don’t trust people, but because AI speeds things up – and that can compound mistakes quickly. Guardrails aren’t about control; they’re about protecting long-term quality.”

What keeps him up at night

For all the potential of AI, Daniil worries about what might be lost.

“I fear we’ll lose the essence of what makes great engineers – curiosity, critical thinking, collaboration – because we’re too focused on speed. I worry about juniors shipping fast but burning out quickly, and seniors becoming bottlenecks because others haven’t developed the judgment to take ownership. And I worry that mentorship will feel optional, when in reality it should be foundational.”

Reasons for optimism

Despite these concerns, Daniil is hopeful. “We now have tools that can eliminate repetitive work, accelerate prototyping, and even act as a second brain in early exploration phases,” he said. “That means engineers can spend more time designing better systems, engaging in deeper collaboration, and mentoring others.”

The key, he emphasised, is integration: “AI won’t make teams better on its own. It’s up to us to design environments where it strengthens, not weakens, the human fabric of engineering.”

Closing words

As our conversation wrapped up, Daniil reflected on the advice he gives to junior engineers starting their careers today:

“Use the tools – but don’t skip the hard parts. That’s where the real learning lives. And seek out people, not just prompts. The most impactful careers are built through relationships, reflection, and resilience – AI can’t do that part for you.”