The International AI Safety Report 2026 has been released, aiming to set a shared baseline for how governments, labs, and enterprises talk about the risks and real-world behaviour of general-purpose AI systems. The report brings together more than 100 contributors and is chaired by Yoshua Bengio, positioning itself as a global reference point for what’s changing in AI capabilities and what’s becoming harder to ignore as deployment accelerates.

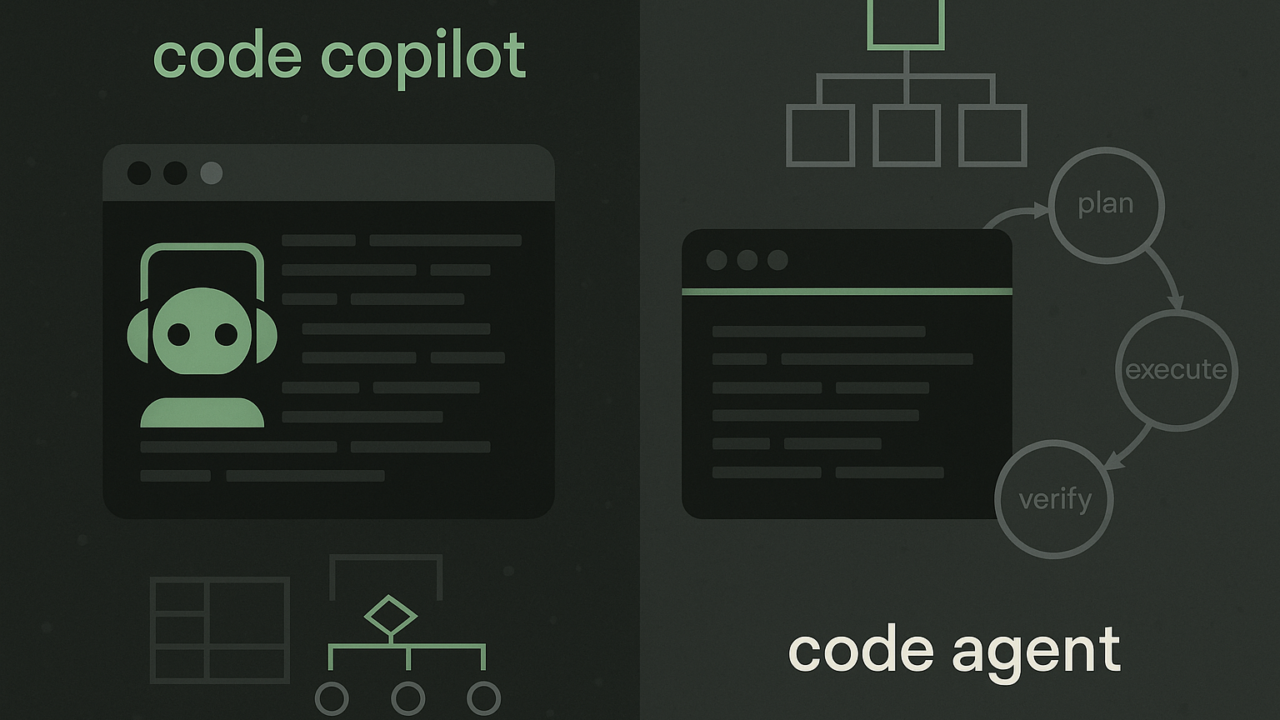

What makes the timing feel urgent is where the industry is right now: LLMs are no longer “experimental assistants.” They’re being embedded into workflows where failure is expensive: customer support, internal knowledge systems, compliance-heavy operations, and security-sensitive environments. That shift puts pressure on a simple question many teams still can’t answer confidently: what evidence actually proves an enterprise LLM feature is safe and reliable, and what is just optics?

The report also highlights a growing tension between public benchmarks and production reality. A model can look strong in controlled testing yet fail in messy conditions: ambiguous instructions, edge cases, adversarial prompts, or unexpected tool behaviour. As more companies adopt AI features at speed, the gap between “demo performance” and “operational reliability” becomes a business risk, not a research debate.

To unpack what “real proof” should look like in 2026, we asked Aleksandr Timashov, an ML engineer and expert in LLM evaluation and reliability, to share what he considers a reasonable bar for safety, and what still feels like “safety theatre.”

Aleksandr’s comment:

“Vibe coding is a good analogy for enterprise LLM safety. It often looks impressive and sounds confident, but quite frequently the generated code does not work. When you point out an error, the model apologizes and produces even more code, and this loop repeats until a human steps in, usually exhausted. That confidence and verbosity should not be confused with reliability. As a result, the basic safety bar for enterprise LLMs does not really change compared to classic ML. You still need offline evaluation, shadow mode, safety logging, and A/B testing against business metrics where applicable.”
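
To make one item on that list concrete, here is a minimal Python sketch of shadow mode combined with logging. It is an illustration under stated assumptions, not Timashov's implementation: the names `shadow_call`, `production`, `candidate`, and `log` are hypothetical, the two models are stand-in callables, and the case-insensitive string comparison is a placeholder for whatever agreement metric a team actually uses.

```python
import json
from typing import Callable, List


def shadow_call(
    prompt: str,
    production: Callable[[str], str],  # hypothetical: the system users already rely on
    candidate: Callable[[str], str],   # hypothetical: the new LLM feature under test
    log: List[str],                    # stand-in for a real logging sink
) -> str:
    """Return the production answer; run the candidate silently and log both."""
    served = production(prompt)
    record = {"prompt": prompt, "served": served}
    try:
        shadow = candidate(prompt)
        record["shadow"] = shadow
        # Placeholder agreement check; a real setup would use a task-specific metric.
        record["agree"] = shadow.strip().lower() == served.strip().lower()
    except Exception as exc:
        # A failing candidate must never affect what the user sees.
        record["shadow_error"] = repr(exc)
    log.append(json.dumps(record))
    return served
```

The design choice that matters is that the user only ever receives the production answer. The candidate's output, including its failures, goes to the log, where it can be reviewed offline before any A/B test exposes it to real traffic.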