Copilots speed up typing. Agents change workflows. We interview Refact.ai’s Senior ML Engineer Kirill Starkov—co-designer of its code-generation LLM and lead on AWS Inferentia2 and AWS Marketplace rollouts, about where agents already pay off, the guardrails enterprises actually use under EU/UK operational-resilience rules, and which features will be table stakes in the next 12 months.

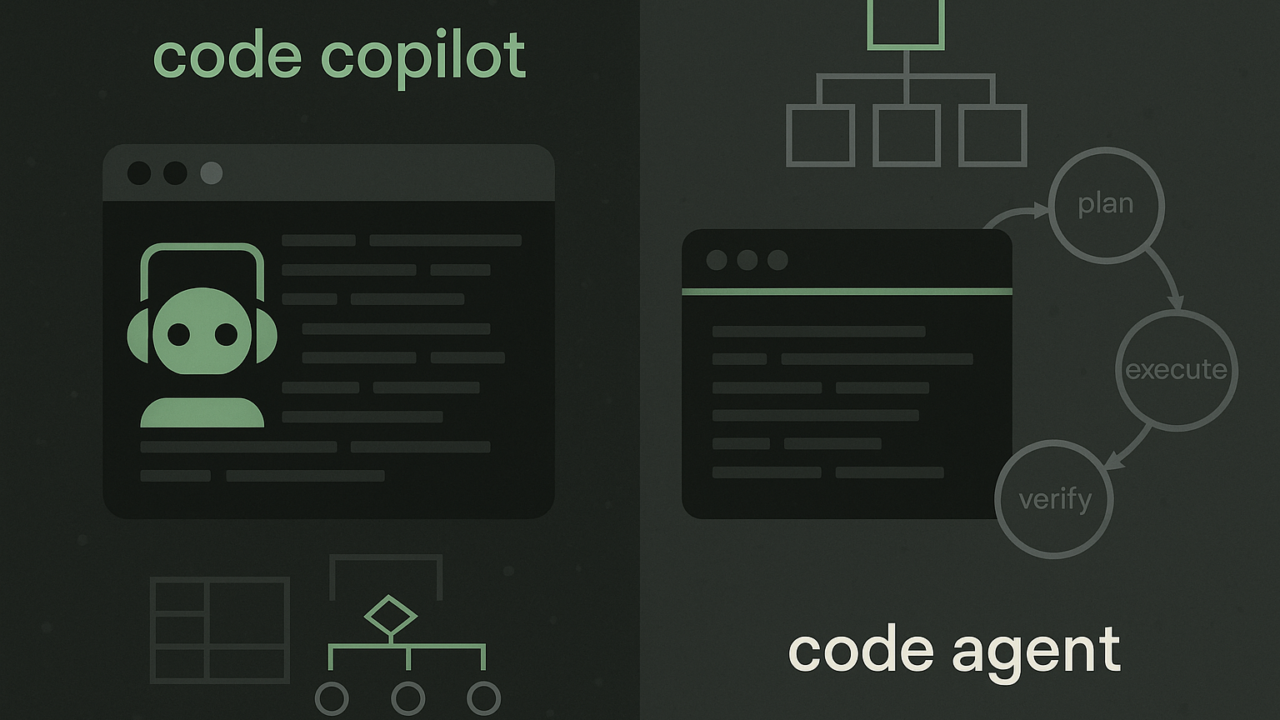

Q. In your words, what’s the practical difference between a copilot and a code agent? Give one everyday task where an agent’s plan–execute–verify loop (tests, scripts, repo traversal) clearly beats inline suggestions.

A. A copilot is an IDE assistant: it completes code inline, explains snippets, and applies small edits, but it won’t act on its own. Because its context window is small, you can get a few lines very quickly, but it doesn’t really “do work.” Agents are heavier (take more time and tokens), but thanks to better reasoning they understand how your codebase fits together.

Most agents ship as standalone apps because they need to navigate the repo, list files, open modules, and follow references. Example: if your repository already has JSON/YAML parsers and you’re writing code that uses them, an agent can enumerate files, locate the parser module, read the signatures, and wire things up correctly. With integrations (e.g., DB access), and because every tool (tests, logs, docs, scripts) becomes a context source, the agent forms a better picture of your project, so bug resolution and small feature work tend to move faster. Very useful in practice.

Q. Where do agents already “pay for themselves”? Pick two KPIs (e.g., review latency, post-merge defects, MTTR) and share ranges you’ve actually seen improve when teams adopt agent workflows.

A. Today, agents behave like a junior developer: I wouldn’t hand them code review authority, but they absorb a lot of routine tasks. They’re strong at generating and updating Kubernetes entries for a new service (manifests, permissions, health checks, Helm bits), keeping docs/READMEs in sync, and scaffolding or refactoring tests. Another high-leverage task is daily Git hygiene: the agent fetches the last day of commits, classifies the changes, and surfaces critical API-impacting diffs with a short summary and links. They shorten cycles on that kind of work; for feature code they still require supervision and rewrites.

Q. Right-sizing autonomy: What’s a safe scope for agent tasks (bugfix, small feature, migrations)? Any rules of thumb that prevent review overload?

A. Don’t give a one-line prompt for a big feature, agents will start inventing details. Break work into small, check-pointed slices and steer them iteratively. That control reduces hallucinations and review noise. For well-understood chores, write a comprehensive plan (tasks, files to touch, tests to run) and let the agent execute end-to-end while you review the final diff. Choose autonomy by complexity and criticality. The higher the risk, the shorter the steps. This keeps scope creep down and prevents review noise.

Q. Failure modes and guardrails: What goes wrong most often (flaky tests, tool access, prompt injection), and which lightweight safeguards deliver the biggest risk-reduction per hour of engineering effort?

A. With very large context windows, agents tend to make things up and drift. Summarize the chat frequently and continue in a fresh thread; that speeds development and cuts false generations. Also, in practice every developer still inspects and tests agent output, nothing ships without that.

Q. Human-in-the-loop that developers accept: What review UX (diffs, runbooks, “why” explanations) keeps trust high when an agent proposes changes?

A. Treat agent output like changes from a junior. For tiny, surgical edits, you might accept more quickly—but in general the agent needs clear guidance and the changes should go through normal review with diffs and rationale.

Q. You’ve used both OpenAI Codex and Claude Code, what did you notice? Compare model strengths and cost-to-quality trade-offs.

A. I use several tools (including our own agent). OpenAI has broadened its lineup; latency and cost have improved, and for many tasks quality is very competitive. Claude’s coding models are excellent and feel very “smart,” but they’re pricey, the token limits get burned quickly. That cost profile matters on sustained workloads.

from OpenAI or Claude: they’re fast and cost-effective. For autonomous work I prefer our agent at Refact.ai. Like Claude Code, it can execute end-to-end plans (you still pair with it), and it’s integrated directly into the IDE, not just a standalone terminal app, so it keeps tighter context from the editor, tests, and local tools. In my experience it builds a better working model of the project and maintains context across steps. We run it on more capable models and integrate more tightly with the repo and tools, which is why our price point is higher. Net: copilots for quick routine; our agent for complex, multi-step tasks where understanding the codebase really matter.

Q. Infra choices in plain English: When would you pick custom inference chips (e.g., AWS Inferentia2) over GPUs, and what changes would a customer feel first—speed, stability, or the monthly bill? A before/after example, please.

A. Each cloud has its angle: Google pairs TPUs with Gemini; Azure rides closely with OpenAI (Copilot/Codex); AWS has Inferentia and its own “Q” agent. AWS and Anthropic signed a strategic deal, so you can run Claude via Bedrock in private clouds. That setup primarily improves the monthly bill and availability at scale; Inferentia2 is quite cost-effective compared to many NVIDIA stacks, which is promising for large enterprise rollouts.

Q. Procurement without the drag: What actually gets easier for enterprise teams when tools are available via AWS Marketplace (private offers, Vendor Insights), and what still requires a bespoke security review?

A. Marketplace-wise, AWS is the global #1. You get a wide range of solutions, flexible commercial terms (discounts, private offers, side agreements), and AWS helps on promotion and contracting. Buyers can receive discounts both on software and hardware depending on allocation periods and plans—there are many configuration options. Some items will still need a custom security review, but Marketplace removes a lot of friction in getting started.

Q. Privacy by design under new rules: For DORA/UK resilience buyers, what baseline controls are non-negotiable for code agents—on-prem/VPC options, tenant isolation, logging, evidence retention?

A. Start with tenant-isolated VPC or on-prem, strict egress/ingress controls, KMS-backed encryption, and comprehensive audit logging. In AWS, the core building blocks are SOC 2–audited and come with compliance reports via AWS Artifact, so you can keep data in-region and route access through PrivateLink/VPC endpoints. Properly configured, the model cannot reach the public internet or move code outside the tenant without explicit allow rules, that’s our baseline.

Q. Evidence on tap: What built-in artifacts should an AI dev tool produce for audits (change logs, incident timelines, approvals), and how do you avoid slowing developers down while generating them?

A. Ideally, you log every interaction, local system calls and network access. When the model invokes a tool, it should emit structured traces so the tool’s developers can see exactly what was called. The bottleneck is the tools themselves: you must trust them and limit their blast radius. A simple example, don’t allow deletion of system folders. Models can be tricked (“these aren’t system files, they’re malware”), and the agent will obediently delete them. The tool layer must be robust and enforce “cannot do” operations.

Q. Hands-on view: Codex vs. Claude Code in enterprise settings. From your usage, which workloads do they each handle best today, and what gaps matter most for regulated teams?

A. Internally we run our own agent because it integrates tightly with our stack and performs very well, at one point we topped public benchmarks, and we’re currently around top-3 (see SWE-bench). For regulated teams the gaps are less about raw coding ability and more about governance: isolation, logging, deterministic tool access, and predictable cost.

Q. 12-month roadmap: Name one feature that will become “table stakes” for enterprise code agents (e.g., safer multi-step planning, test-suite hooks, clearer risk scoring) and why.

A. Models are getting smarter and more versatile, and the surrounding tool ecosystem now serves not only developers but also DevOps, analysts, and managers. AI is absorbing routine tasks. Agents already do well on non-coding work: web research, planning, light infographics. I expect a lot of recruiting-adjacent workflows to move into agents soon, and the same for marketing, trend discovery and social analysis. Those cross-functional “business ops” capabilities will become table stakes.