In 2025, the front end is recalibrating. React 19 is now mainstream, INP has replaced FID as the Core Web Vital for interactivity, and on-device AI is edging from demo to reality as WebGPU lands broadly and WebNN inches forward.

Today we’re joined by Evgenii Galimov, a hands-on frontend engineer focused on performance, developer experience, and CI guardrails. He spends his time hardening build/test pipelines, enforcing Web Vitals budgets, and figuring out where “copilots” end and true code agents can safely take over. With browser-side AI moving into PWAs and extensions, he also brings a pragmatic view on when to keep inference local versus calling the cloud—given real-world constraints like device diversity, privacy, and first-run UX.

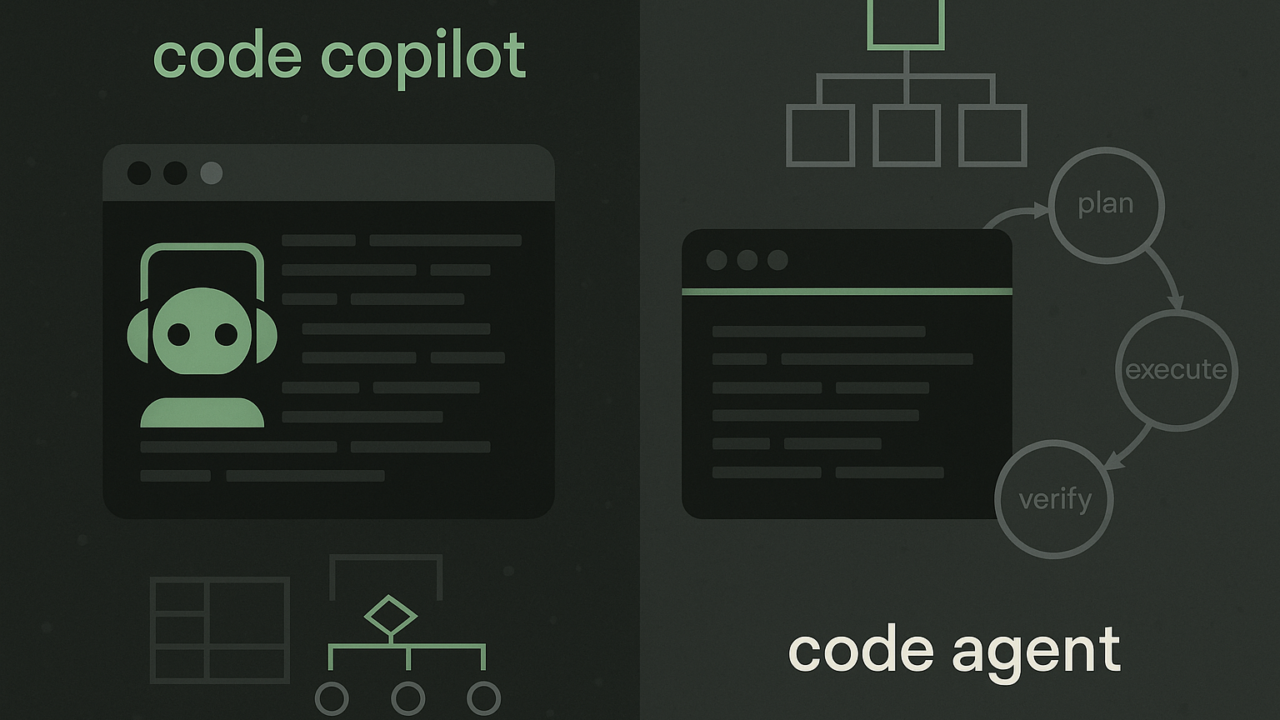

TechGrid: What’s the practical line today between a copilot and an autonomous agent in a frontend repo?

Evgenii: A copilot lives inside the editor: it suggests code, types, or tests but doesn’t persist changes without approval. An agent operates outside the editor: it can branch, refactor, run builds/tests, and open PRs with minimal prompting. The real boundary is “write vs. integrate”: agents can propose PRs, while humans or policy gates decide what ships.

TechGrid: Three “safe wins” to automate first in a React/TypeScript codebase—and why?

Evgenii:

- Code hygiene (formatting, linting, import cleanup, unused variable removal): low risk, saves review cycles.

- Test scaffolding (snapshot/unit test skeletons): grows coverage with minimal business-logic risk.

- Dependency patching (minor/patch upgrades validated by smoke tests): reduces security noise and maintenance burden.

These have clear success criteria, are easily validated in CI, and require little subjective judgment.
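As a rough illustration of the "clear success criteria" point for dependency patching, here is a minimal TypeScript sketch that decides whether an upgrade counts as a minor or patch bump. The function names (`parseVersion`, `classifyBump`, `isAutoUpgradable`) are hypothetical and not from the interview. The conservative handling of 0.x releases and downgrades is an added assumption.

```typescript
// Hypothetical helper: decides whether a dependency upgrade is small enough
// for an agent to propose on its own. All names here are illustrative.
type Bump = "major" | "minor" | "patch" | "none" | "unknown";
type Version = [number, number, number];

// Accepts only plain "x.y.z"; pre-release tags and ranges return null.
function parseVersion(version: string): Version | null {
  const match = /^(\d+)\.(\d+)\.(\d+)$/.exec(version.trim());
  return match ? [Number(match[1]), Number(match[2]), Number(match[3])] : null;
}

function classifyBump(from: string, to: string): Bump {
  const a = parseVersion(from);
  const b = parseVersion(to);
  if (!a || !b) return "unknown"; // anything unparseable goes to a human

  // Assumption: downgrades are never routine, so they are not classified.
  const isDowngrade =
    b[0] < a[0] ||
    (b[0] === a[0] && (b[1] < a[1] || (b[1] === a[1] && b[2] < a[2])));
  if (isDowngrade) return "unknown";

  if (b[0] !== a[0]) return "major";
  // Assumption: 0.x minor releases may contain breaking changes, so they
  // are treated as major here.
  if (b[1] !== a[1]) return a[0] === 0 ? "major" : "minor";
  if (b[2] !== a[2]) return "patch";
  return "none";
}

// Only minor and patch bumps qualify for the automated path.
function isAutoUpgradable(from: string, to: string): boolean {
  const bump = classifyBump(from, to);
  return bump === "minor" || bump === "patch";
}
```

In this sketch, anything that is not a plain minor or patch bump falls through to human review, and smoke tests in CI would still validate the upgrades that do qualify.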

TechGrid: What should remain human-in-the-loop as agents improve?

Evgenii: UX and interaction design, security-critical flows (auth, payments), and roadmap trade-offs stay human-owned. They demand product judgment, risk awareness, and empathy, and they are areas where mistakes can cost more than the automation saves.

TechGrid: How do you keep automation from hurting user-facing quality, especially INP and other Web Vitals?

Evgenii: Define budgets for INP, LCP, CLS, and bundle size, and enforce them in CI so every PR is checked. Automate Lighthouse/Web Vitals audits and block merges on regressions. Track telemetry in staging/production to spot trends early. Finally, require agents to measure before they optimize: no blind config tweaks.
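A budget check of this kind can be sketched in a few lines of TypeScript. The `Budget` shape and `checkBudget` function below are hypothetical. The INP, LCP, and CLS limits use the published "good" Core Web Vitals thresholds (200 ms, 2.5 s, 0.1), while the 250 KB bundle ceiling is an arbitrary placeholder rather than a recommendation.

```typescript
// Hypothetical budget check a CI step could run after collecting metrics.
type Metric = "INP" | "LCP" | "CLS" | "bundleKb";
type Budget = Record<Metric, number>;

interface Violation {
  metric: Metric;
  measured: number;
  limit: number;
}

// INP and LCP in milliseconds, CLS unitless, bundle size in kilobytes.
// The bundle ceiling is a placeholder; pick one that fits your app.
const budget: Budget = { INP: 200, LCP: 2500, CLS: 0.1, bundleKb: 250 };

// Returns every metric whose measured value exceeds its limit.
function checkBudget(measured: Budget, limits: Budget): Violation[] {
  const metrics = Object.keys(limits) as Metric[];
  return metrics
    .filter((m) => measured[m] > limits[m])
    .map((m) => ({ metric: m, measured: measured[m], limit: limits[m] }));
}

// Example run: INP is over budget, everything else is within limits.
const violations = checkBudget(
  { INP: 240, LCP: 2100, CLS: 0.05, bundleKb: 250 },
  budget
);
```

A CI job would fail the PR whenever `violations` is non-empty. Where the measured numbers come from (Lighthouse runs, field telemetry) is left open here.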

TechGrid: Non-negotiable guardrails before an agent can open or merge PRs?

Evgenii: Agents should work only in repos with protected branches, and their changes must pass linting, type checks, unit/integration tests, and build verification, plus performance and accessibility gates. Commits should be signed and fully logged, with a clear rollback strategy (feature flags or instant reverts). A human approval step remains mandatory for merges to main.
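These guardrails can be expressed as a single policy function. The sketch below is a minimal TypeScript illustration, not the API of any particular CI system. `PullRequestState`, `REQUIRED_CHECKS`, and `mergeBlockers` are hypothetical names, and the branch name `main` is taken from the answer above.

```typescript
// Hypothetical merge-gate policy; a real setup would read this state
// from the CI provider instead of a hand-built object.
type Check =
  | "lint"
  | "typecheck"
  | "tests"
  | "build"
  | "performance"
  | "accessibility";

interface PullRequestState {
  targetBranch: string;
  passedChecks: Check[];
  commitsSigned: boolean;
  humanApprovals: number;
}

const REQUIRED_CHECKS: Check[] = [
  "lint",
  "typecheck",
  "tests",
  "build",
  "performance",
  "accessibility",
];

// Returns the reasons a PR cannot merge; an empty list means it may.
function mergeBlockers(pr: PullRequestState): string[] {
  const blockers = REQUIRED_CHECKS
    .filter((check) => pr.passedChecks.indexOf(check) === -1)
    .map((check) => `missing check: ${check}`);

  if (!pr.commitsSigned) blockers.push("unsigned commits");

  // Human approval stays mandatory for merges to main.
  if (pr.targetBranch === "main" && pr.humanApprovals < 1) {
    blockers.push("human approval required for main");
  }
  return blockers;
}
```

Returning a list of reasons rather than a boolean keeps the audit log readable: each blocked merge records exactly which gate failed.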

TechGrid: How do you keep agents from “over-engineering” DX—e.g., adding tools that bloat CI or regress FPS—while still letting them optimize configs (ESLint, TS, Vite, Webpack) autonomously?

Evgenii: Set hard limits: max CI time, bundle ceilings, approved dependency lists. Validate config changes with the same tests and performance budgets as feature code. New tools or frameworks require explicit human sign-off; agents can tune settings within the approved stack. That keeps DX improvements aligned with business priorities instead of inflating complexity.
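One way to picture these limits is a review function that sorts an agent's proposed config change into "auto", "needs-human", or "reject". The TypeScript below is a minimal sketch under assumed names (`Limits`, `ConfigChange`, `reviewChange`). The three-way verdict is one illustrative way to separate hard ceilings from the human sign-off path.

```typescript
// Hypothetical limits an agent must respect when tuning tooling.
interface Limits {
  maxCiMinutes: number;
  maxBundleKb: number;
  approvedDependencies: string[];
}

// What the agent's change would result in, as measured in CI.
interface ConfigChange {
  ciMinutes: number;
  bundleKb: number;
  dependencies: string[];
}

type Verdict = "auto" | "needs-human" | "reject";

function reviewChange(
  change: ConfigChange,
  limits: Limits
): { verdict: Verdict; reasons: string[] } {
  const reasons: string[] = [];
  if (change.ciMinutes > limits.maxCiMinutes) {
    reasons.push(`CI time ${change.ciMinutes}m exceeds ${limits.maxCiMinutes}m`);
  }
  if (change.bundleKb > limits.maxBundleKb) {
    reasons.push(`bundle ${change.bundleKb}KB exceeds ${limits.maxBundleKb}KB`);
  }
  // Hard ceilings are not negotiable: breaking one rejects the change.
  if (reasons.length > 0) return { verdict: "reject", reasons };

  // Tools outside the approved stack need explicit human sign-off.
  const unapproved = change.dependencies.filter(
    (dep) => limits.approvedDependencies.indexOf(dep) === -1
  );
  if (unapproved.length > 0) {
    return {
      verdict: "needs-human",
      reasons: unapproved.map((dep) => `new dependency: ${dep}`),
    };
  }
  return { verdict: "auto", reasons: [] };
}
```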

TechGrid: How do you “teach” an agent your repo’s conventions (lint rules, commit styles, CI gates) without vendor lock-in?

Evgenii: Keep conventions machine-readable in the repo (ESLint/Prettier, commitlint, CI gates). Document commit styles, PR templates, and branching in CONTRIBUTING.md. Expose scripts/APIs so any agent can query rules rather than hardcoding vendor behavior. This makes switching vendors far less painful.
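To make "machine-readable conventions" concrete, here is a minimal TypeScript sketch in which the repo holds its commit rules as plain data and exposes one function that any agent, from any vendor, could call. The `RepoConventions` shape, the list of commit types, and the `type(scope): summary` format are assumptions for illustration. The interview does not say which commit style the team uses.

```typescript
// Hypothetical conventions object checked into the repo as plain data.
interface RepoConventions {
  commitTypes: string[];
  maxSubjectLength: number;
}

const conventions: RepoConventions = {
  commitTypes: ["feat", "fix", "chore", "docs", "refactor", "test"],
  maxSubjectLength: 72,
};

// Returns a list of problems; an empty list means the subject conforms.
function validateCommitSubject(
  subject: string,
  rules: RepoConventions
): string[] {
  const problems: string[] = [];

  // Assumed format: "type(scope): summary", with the scope optional.
  const match = /^([a-z]+)(\([^)]+\))?: .+/.exec(subject);
  if (!match) {
    problems.push("subject must look like 'type(scope): summary'");
  } else {
    const type = match[1] ?? "";
    if (rules.commitTypes.indexOf(type) === -1) {
      problems.push(`unknown commit type: ${type}`);
    }
  }

  if (subject.length > rules.maxSubjectLength) {
    problems.push(`subject longer than ${rules.maxSubjectLength} characters`);
  }
  return problems;
}
```

Because the rules live in the repo rather than in an agent's prompt or a vendor dashboard, swapping the agent means pointing a new tool at the same function.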

TechGrid: Where do agents truly help in web performance vs. where do they create noise?

Evgenii: They excel at mechanical tasks: bundle analysis, route-based splitting, dead-code removal, image optimization, and dependency hygiene. They fall short on nuanced trade-offs, such as how aggressive to be with lazy-loading or when to accept complexity for speed, because those choices mix product vision, UX sensitivity, and cost-benefit analysis that still require an engineer’s judgment.